1. Issues with the spread of artificial intelligence (AI)

New technologies such as the Internet and smartphones rapidly and conveniently transformed our daily lives and society, but they also brought about new problems. Similarly, the use of artificial intelligence across many areas of society raises concerns about potential risks and issues. Many of these problems are social and ethical in nature and cannot be resolved solely by improving existing technologies or introducing new ones. In some cases users exploit AI technology for malicious purposes; in others, AI makes anti-social or inhumane decisions that cause social chaos.

AI accident cases


Accident case 1: A U.S. lawyer submits fabricated legal precedents suggested by ChatGPT

A lawyer in the United States submitted a legal brief to the court citing precedents suggested by ChatGPT. However, it was revealed that the precedents ChatGPT had suggested were fabricated. Although the lawyer repeatedly asked ChatGPT whether the precedents were authentic, it consistently claimed they were genuine (May 2023).

- Implications

- Concerns arising from hallucinations caused by generative artificial intelligence.


Accident case 2: AI chatbot creates fake interview article

A German weekly magazine used an AI chatbot to produce a fake interview article with a renowned racing driver whom it had never actually interviewed (April 2023).

- Implications

- The use of artificial intelligence to fabricate and disseminate false information, leading to social disruption.


Accident case 3: Robot injures a child during a chess game

At a chess tournament in Moscow, a chess-playing robot broke the finger of a 7-year-old boy during a game; the boy was reported to have violated the safety rules (July 2022).

- Implications

- Safety accidents caused by hardware controlled by AI


Accident case 4: Delivery workers demand disclosure of a delivery platform's dispatch algorithm

Delivery workers claimed that a delivery platform's AI dispatch algorithm was being used for labour control and produced unfair delivery fees (June 2021).

- Implications

- Users questioning the basis of AI's reasoning results

2. Concept of AI trustworthiness

As the previous cases demonstrate, AI products and services must be evaluated not only from the technical perspective of 'can it be implemented?' but also from the ethical standpoint of 'is it appropriate for this product or service to exist?'. In particular, as AI is adopted across many fields, using it without recognizing the ethical flaws in its systems and learning models can have significant ripple effects. 'AI trustworthiness' refers to the set of value standards that must be followed to address data and model bias and the inherent risks and limitations of AI, and to prevent unintended consequences as AI is deployed and disseminated. Major international organizations are actively discussing the key elements required to ensure AI trustworthiness; safety, explainability, transparency, robustness, and fairness are generally identified as its essential components.

Key attributes and meaning of AI trustworthiness

| Key attribute | Meaning |
|---|---|
| Safety | A state in which the potential risk to humans and the environment is reduced or eliminated when a system operates or functions based on AI's judgments and predictions. |
| Explainability | A state in which the basis of AI's judgments and predictions, and the process that led to the result, are presented in a way humans can understand, or in which the cause of a problem can be traced. |
| Transparency | A state in which AI's decision-making results are explainable or their basis is traceable, and information about the purpose and limitations of the AI is conveyed to users in an appropriate way. |
| Robustness | A state in which AI maintains its performance and functions at the level intended by the user even under external interference or in extreme operating environments. |
| Fairness | A state in which AI does not discriminate against or show bias toward specific groups when processing data, and does not reach discriminatory or biased conclusions. |

※ Privacy, sustainability, etc. are also being discussed as key attributes.
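The robustness attribute can be turned into a measurable check. The following is a minimal sketch, assuming a classifier exposed as a plain Python function and random input noise as a simple stand-in for "external interference"; the toy model, noise level, and `max_drop` tolerance are hypothetical choices, not values from this document. It does not cover malicious (adversarial) attacks, which require dedicated techniques.

```python
import random
from typing import Callable, List, Tuple

Model = Callable[[List[float]], int]


def accuracy(model: Model, inputs: List[List[float]], labels: List[int]) -> float:
    """Fraction of inputs the model labels correctly."""
    correct = sum(1 for x, y in zip(inputs, labels) if model(x) == y)
    return correct / len(labels)


def perturb(x: List[float], noise: float, rng: random.Random) -> List[float]:
    """Add uniform noise in [-noise, noise] to every feature."""
    return [v + rng.uniform(-noise, noise) for v in x]


def robustness_check(model: Model, inputs: List[List[float]], labels: List[int],
                     noise: float = 0.1, max_drop: float = 0.05,
                     seed: int = 0) -> Tuple[float, float, bool]:
    """Return (clean accuracy, noisy accuracy, passed).

    The check passes if accuracy drops by at most `max_drop` (hypothetical
    tolerance) when every input is perturbed by bounded random noise.
    """
    rng = random.Random(seed)  # fixed seed keeps the check reproducible
    clean_acc = accuracy(model, inputs, labels)
    noisy_inputs = [perturb(x, noise, rng) for x in inputs]
    noisy_acc = accuracy(model, noisy_inputs, labels)
    return clean_acc, noisy_acc, clean_acc - noisy_acc <= max_drop


# Hypothetical toy model: predicts 1 when the feature sum exceeds 1.0.
def toy_model(x: List[float]) -> int:
    return int(sum(x) > 1.0)


inputs = [[0.0, 0.2], [0.1, 0.1], [1.0, 1.0], [0.9, 0.8]]
labels = [0, 0, 1, 1]
clean, noisy, passed = robustness_check(toy_model, inputs, labels)
print(clean, noisy, passed)  # → 1.0 1.0 True
```

Because every toy input lies well away from the decision boundary, noise of ±0.1 per feature cannot flip a prediction, so the check passes; inputs near the boundary would expose a drop in accuracy.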

- The concept of AI trustworthiness as discussed by major institutions

- (International Organization for Standardization, ISO) Suggests availability, resilience, security, privacy, safety, responsibility, transparency, integrity, etc. as detailed attributes (ISO/IEC TR 24028:2020)

- (Organisation for Economic Co-operation and Development, OECD) Describes trustworthy AI as AI that is transparent, explainable, robust, and safe, in line with a sustainable society and human-centred values (2019)

- (National Institute of Standards and Technology, NIST) Describes trustworthiness as a goal that must be met when using AI for social benefit and economic growth, encompassing explainability, safety, security, etc. (2020)

- (European Commission, EC) The use and operation of AI must be lawful, ethical, and technically and socially robust (2019)

3. Domestic and international AI trustworthiness policies and research trends

Major economies such as the European Union and the United States regard securing AI trustworthiness as a prerequisite for the social and industrial acceptance and development of AI, and are promoting policies to secure it. Industry and academia are also actively researching ways to secure trustworthiness, centred on developing the related technologies. Specifically, the EC and the US are preparing, in earnest, the policies and standards needed to secure AI trustworthiness, and have designated Trustworthy AI and Safe AI as key elements of their national-level AI strategies. The EC in particular is moving toward legislation to secure trustworthiness proactively, having proposed an AI regulation in 2021. Meanwhile, the private sector is preparing guidelines for securing AI trustworthiness in order to create an environment in which organizations can check and secure the trustworthiness of their AI on their own. On the technology side, academic institutions and global companies in the US and Europe are developing the various technologies required to secure AI trustworthiness. To keep up with these global trends, Korea is also moving quickly in both policy and R&D, having announced the "Artificial Intelligence (AI) Ethical Standards (December 2020)" and the "Strategy for Realizing Trustworthy AI (May 2021)".

Policies and trends related to AI trustworthiness in major countries

| Country/organization | Major policies (Year) | Characteristics |
|---|---|---|
| Korea | | Promotes comprehensive policies, including building an AI ecosystem, training talent, expanding industry, and preventing dysfunctions, with ethical, human-centred AI as the base value |
| European Commission | | Promotes balanced AI policies covering human-centred values, ethics, and security |
| UNESCO | | International guidelines on the ethics of artificial intelligence, unanimously adopted by UNESCO's 193 member states |
| The US | | Focuses on developing and supporting AI technologies and on deregulation to promote the use of AI by industry |
| China | | Promotes business-friendly policies such as large-scale government-led investment, aggressive talent training, and open data sharing |
| Japan | | Takes a comprehensive approach from economic, industrial, social, and ethical perspectives |
| Singapore | | Proposes action guidelines based on explainability, transparency, fairness, and human-centred principles for each of four key areas |

Research on AI trustworthiness by major overseas companies, universities, and institutions

| Institution | Activities and details |
|---|---|
| Defense Advanced Research Projects Agency (DARPA) | Conducts projects including research on securing the safety and trustworthiness of intelligent systems (Assured Autonomy) and R&D on explainable artificial intelligence (XAI, eXplainable AI) |
| National Institute of Standards and Technology (NIST) | Develops, in collaboration with global companies and research institutes, an AI risk management framework that practitioners can use |
| Stanford University | Publishes the annual 'AI Index' on the level and trends of AI technology, and conducts research on AI safety |
| IBM | Publishes internal work guidelines and white papers, and develops verification tools to ensure fairness, explainability, and robustness under the motto of 'Trusted AI' |
| Microsoft | Publishes internal work guidelines and white papers, and develops verification tools to ensure fairness, explainability, and transparency under the motto of 'Responsible AI' |
| | Publishes guidelines and tools for establishing principles for developing 'Responsible AI' and for checking and verifying trustworthiness |

Trustworthiness Verification

Many domestic and international institutions and companies have released ethical principles, instructions, and guidelines for securing AI trustworthiness, but none has proposed a detailed methodology from a technical point of view. We therefore developed items that stakeholders such as data scientists and model developers can check to ensure trustworthiness when developing AI products and services. The development process was as follows.

1. Design elements of the development guidelines (AI service components, life cycle, and trustworthiness requirements)

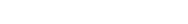

During development, we examined which factors matter most in work aimed at ensuring trustworthiness, and identified three design elements for developing the requirements and the verification process. Each design element was reflected in preparing the requirements and verification items, and this approach was systematized in the matrix form shown in the figure below and named the 'AI Trustworthiness Framework'.

AI trustworthiness framework

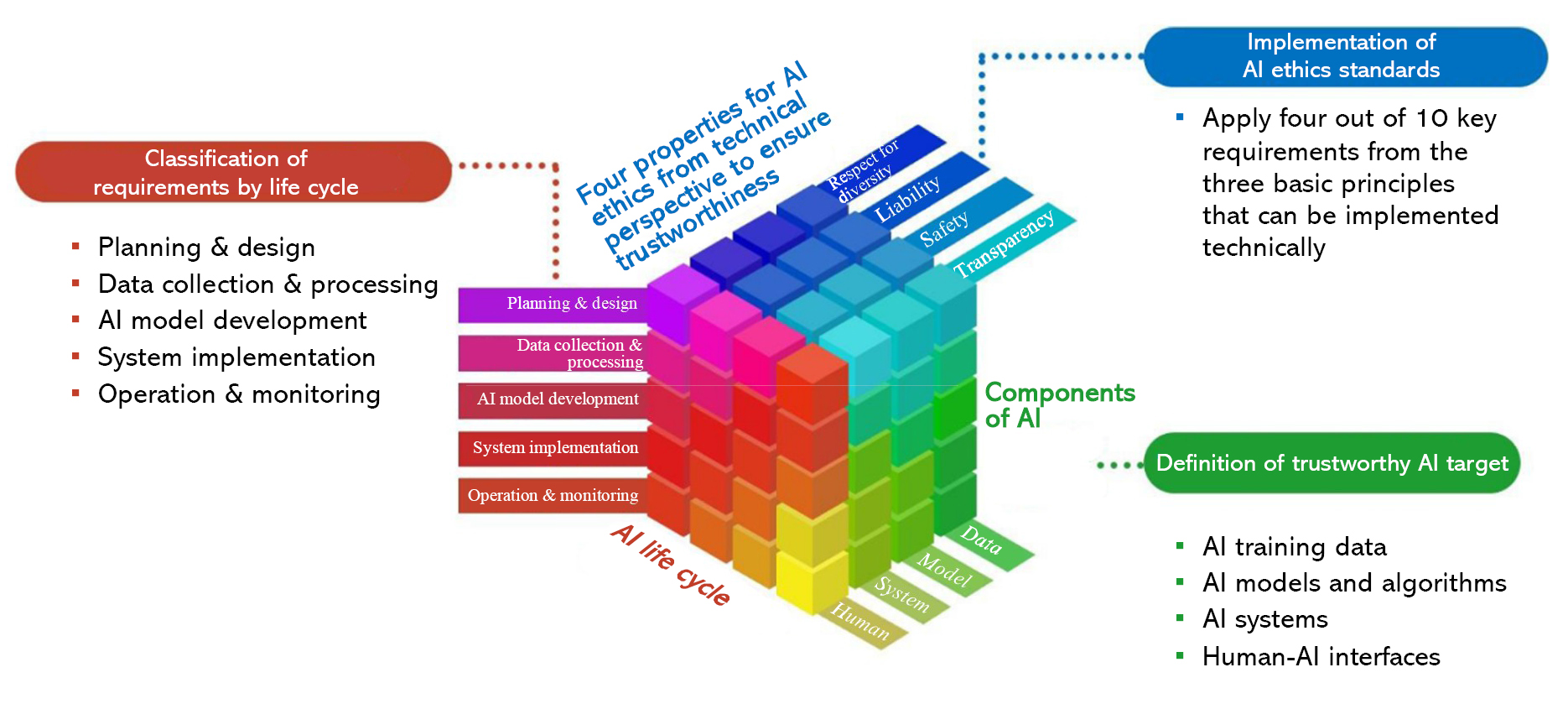

The first element is the AI service components. The four components are the data used for AI learning, the AI models and algorithms that perform learning and reasoning, the system that implements the actual functions, and the interface through which users interact with the AI. Each component is developed, verified, and operated individually or collectively according to the life cycle of the AI service. We therefore sought ways to secure trustworthiness for each component, and present requirements and verification items for each. The method of ensuring trustworthiness for each component is as follows.

AI service components

| AI service component | How trustworthiness is secured |
|---|---|
| AI learning data | Verifying whether bias has been removed from, and fairness ensured in, the data used in AI learning and reasoning |
| AI models and algorithms | Verifying whether the AI models and algorithms produce safe results, are explainable, and are robust against malicious attacks |
| AI systems | Verifying whether the entire system incorporating the AI models and algorithms operates reasonably, and whether countermeasures exist for cases where the AI reasons incorrectly |
| Human-AI interface | Verifying whether users and operators can easily understand how the AI system operates, and whether the AI notifies people or hands over control in case of malfunction |
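For the AI learning data component, one common way to look for bias is to compare how often the positive label appears in each group. The sketch below assumes binary labels and a single group attribute, and uses the demographic parity ratio with a 0.8 threshold (the "four-fifths" heuristic); both the metric and the threshold are illustrative assumptions, not the verification items defined in this guideline.

```python
from collections import defaultdict
from typing import Dict, Hashable, List


def positive_rates(groups: List[Hashable], labels: List[int]) -> Dict[Hashable, float]:
    """Share of positive labels (1) within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for group, label in zip(groups, labels):
        totals[group] += 1
        positives[group] += label
    return {g: positives[g] / totals[g] for g in totals}


def parity_ratio(groups: List[Hashable], labels: List[int]) -> float:
    """Lowest group rate divided by highest group rate (1.0 = perfectly equal)."""
    rates = positive_rates(groups, labels)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


def passes_parity_check(groups: List[Hashable], labels: List[int],
                        threshold: float = 0.8) -> bool:
    """Hypothetical pass/fail rule: flag the data if the ratio falls below threshold."""
    return parity_ratio(groups, labels) >= threshold


# Hypothetical training labels for two groups, A and B.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
print(positive_rates(groups, labels))        # → {'A': 0.75, 'B': 0.25}
print(round(parity_ratio(groups, labels), 3))  # → 0.333
print(passes_parity_check(groups, labels))   # → False
```

A failing check does not prove the data is unfair; it flags a disparity that a data scientist should then investigate, for example by checking how the labels were collected.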

Second, the AI service life cycle refers to the series of procedures for implementing and operating the AI service components described above. It resembles the engineering process or life cycle of conventional software systems, but the characteristics of AI require separate data processing and model development stages, and the key activities in the other stages may also be defined differently. Many references currently divide the life cycle of AI or AI services into 6 to 8 stages; representative examples are the life cycles presented by the OECD and ISO/IEC. AI TrustOps took the life cycles of these two organizations as representative cases and, without distorting the nature and activities of each stage, reorganized them into the following five stages so that practitioners can use them easily.

Main activities by life cycle of AI services

| Life cycle stage | Main activities |
|---|---|
| 1. Planning and design | |
| 2. Collection and handling of data | |
| 3. Development of AI models | |
| 4. System implementation | |
| 5. Operation and monitoring | |

The stages of the AI service life cycle are iterative and cyclical, and are not necessarily sequential. The life cycle is described sequentially from stage 1 to stage 5 for ease of understanding, but the order may change while actual data is being collected and processed or models are being developed and operated.

Third, to define the AI trustworthiness requirements, the 10 core requirements of the 'Artificial Intelligence Ethical Standards' were applied with the necessary modifications, and the requirements that call for requirements and verification items from a technical point of view were derived: 'respect for diversity', 'responsibility', 'safety', and 'transparency'.

International organizations such as the EC, OECD, IEEE, and ISO/IEC subdivide AI trustworthiness into sub-attributes. In particular, ISO/IEC TR 24028:2020 provides keywords in the form of considerations necessary to ensure trustworthiness, including transparency, controllability, robustness, recoverability, fairness, safety, privacy, and security, but it does not define the relationships among these keywords or how each relates to trustworthiness. Terms that look similar but differ slightly depending on the point of view are thus defined differently across the literature, and there is as yet no agreed-upon classification or definition of the attributes. Accordingly, we comprehensively analyzed the attributes and keywords presented by the EC, OECD, IEEE, ISO/IEC, and other organizations, and collected the opinions of domestic experts in academia, research, and industry to seek consensus. After deriving the AI trustworthiness attributes through this wide-ranging exchange of opinions, we made the final selection of requirements to be addressed from a technical perspective by matching them to the 10 requirements of the national AI ethical standards. Each requirement is defined as follows.

AI trustworthiness requirements

| Trustworthiness requirement | Definition |
|---|---|
| Respect for diversity | AI does not learn from or output results based on discriminatory or biased practices against specific individuals or groups, and all people can benefit equally from AI technologies regardless of characteristics such as race, gender, and age. |
| Responsibility | Mechanisms are in place to ensure accountability for AI's reasoning results throughout its life cycle. |
| Safety | |
| Transparency | Humans can understand and trace the reasoning behind AI's results, and know that a result was produced by AI. |

As shown above, there are various attributes for securing AI trustworthiness, and it is important to consider not only the definition of each attribute but also the interdependence among them. For example, excessive transparency requirements for an AI service can create privacy risks, since disclosing detailed information about a system and its data may expose personal information. Likewise, explainability alone is not sufficient to ensure transparency, although it is one of the important factors in achieving it. It is therefore important to provide AI services based on a sufficient understanding of the AI trustworthiness attributes, and to review continuously whether the services perform properly with respect to the attributes applied.
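Taken together, the three design elements (service components, life cycle stages, and trustworthiness requirements) can be read as the axes of the matrix-shaped framework. The sketch below is one hypothetical way to model it, assuming each verification item is indexed by a (requirement, stage, component) cell; the class name `TrustworthinessMatrix` and the two sample checklist items are illustrative inventions, not items from the guideline.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Tuple


class Component(Enum):
    DATA = "AI learning data"
    MODEL = "AI models and algorithms"
    SYSTEM = "AI systems"
    INTERFACE = "Human-AI interface"


class Stage(Enum):
    PLANNING = "1. Planning and design"
    DATA = "2. Collection and handling of data"
    MODEL = "3. Development of AI models"
    SYSTEM = "4. System implementation"
    OPERATION = "5. Operation and monitoring"


class Requirement(Enum):
    DIVERSITY = "Respect for diversity"
    RESPONSIBILITY = "Responsibility"
    SAFETY = "Safety"
    TRANSPARENCY = "Transparency"


Cell = Tuple[Requirement, Stage, Component]


@dataclass
class TrustworthinessMatrix:
    """Hypothetical model: verification items stored per (requirement, stage, component) cell."""
    cells: Dict[Cell, List[str]] = field(default_factory=dict)

    def add_item(self, req: Requirement, stage: Stage, comp: Component, item: str) -> None:
        self.cells.setdefault((req, stage, comp), []).append(item)

    def items_for_stage(self, stage: Stage) -> List[Tuple[Requirement, Component, str]]:
        """All verification items a team would check at one life cycle stage."""
        result = []
        for (req, st, comp), items in self.cells.items():
            if st is stage:
                result.extend((req, comp, item) for item in items)
        return result

    def coverage(self) -> float:
        """Share of the 4 x 5 x 4 = 80 cells that have at least one item."""
        total = len(Requirement) * len(Stage) * len(Component)
        return len(self.cells) / total


m = TrustworthinessMatrix()
# Hypothetical checklist items, for illustration only.
m.add_item(Requirement.DIVERSITY, Stage.DATA, Component.DATA,
           "Has the training data been checked for bias against specific groups?")
m.add_item(Requirement.TRANSPARENCY, Stage.OPERATION, Component.INTERFACE,
           "Are users told that a result was produced by AI?")
print(len(m.items_for_stage(Stage.DATA)))  # → 1
print(m.coverage())                        # → 0.025
```

Indexing by stage lets a team pull only the items relevant to its current work, which matches the intent that data scientists and model developers check items at the point in the life cycle where they apply.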

2. Derivation of AI trustworthiness requirements and verification items

Next, we derived detailed requirements and verification items. First, technical requirements were derived and specified based on the policies, recommendations, and standards for securing AI trustworthiness released by standards bodies, technical organizations, international organizations, and major countries. We also reviewed checklists published in Korea to secure AI trustworthiness, such as the Artificial Intelligence (AI) Personal Information Self-Checklist (May 2021) and the Guidelines on Artificial Intelligence in Financial Services (July 2021). During the review, the necessary content was reflected in the development guidelines, and redundant content was removed or merged. The references are as follows.

References related to AI trustworthiness

| Institution | Publication date | Recommendation and standard |
|---|---|---|
| Korea | Nov. 2020 | National Artificial Intelligence (AI) Ethics Standards |
| European Commission | Jul. 2020 | The Assessment List for Trustworthy Artificial Intelligence |
| UNESCO | Nov. 2021 | Recommendation on the Ethics of Artificial Intelligence |
| International Organization for Standardization (ISO/IEC) | Nov. 2021 | ISO/IEC TR 24027:2021, Information technology - Artificial intelligence (AI) - Bias in AI systems and AI aided decision making |
| | Mar. 2021 | ISO/IEC TR 24029-1:2021, Artificial intelligence (AI) - Assessment of the robustness of neural networks - Part 1: Overview |
| | Jan. 2021 | ISO/IEC 23894, Information technology - Artificial intelligence - Risk management (in development) |
| | May 2020 | ISO/IEC TR 24028:2020, Information technology - Artificial intelligence - Overview of trustworthiness in artificial intelligence |
| National Institute of Standards and Technology (NIST) | Aug. 2022 | AI Risk Management Framework: Second Draft |
| World Economic Forum (WEF) | Jan. 2020 | Companion to the Model AI Governance Framework |
| Organisation for Economic Co-operation and Development (OECD) | May 2019 | Recommendation of the Council on Artificial Intelligence |
| Google | May 2019 | People + AI Guidebook |
| European Telecommunications Standards Institute (ETSI) | Mar. 2021 | Securing Artificial Intelligence (SAI 005) - Mitigation Strategy Report |